Which LLM is the best on ETS questions?

GregMat Team•August 30, 2025 at 7:03 PM

We test different OpenAI and Google AI models to see which of them is the best for the GRE.

First off, this blog post only compares OpenAI and Google models (Gemini and Gemma). The reason is that I did not want to create extra accounts with other providers, but feel free to test them yourself, and we may update the blog if there's demand. Now, the figures:

| Model | Verbal (200-800) | Quant (200-800) |
|---|---|---|
| o3 | 800 | 800 |
| gpt-5 | 800 | 800 |
| gpt-5-mini | 800 | 800 |
| gpt-4.1 | 740 | 620 |
| gpt-4.1-mini | 670 | 620 |
| gpt-4o | 740 | 360 |
| gpt-4o-mini | 350 | 350 |
| Gemini 2.5 Flash Lite (no thinking) | 590 | 200 |
| Gemini 2.5 Pro | 800 | 800 |
| Gemini 2.5 Flash | 780 | 800 |
| Gemini 2.0 Flash | 510 | 710 |
| Gemma 3 (27b) | 500 | 360 |
| Gemma 3 (12b) | 320 | 260 |
| Gemma 3 (4b) | 270 | 270 |

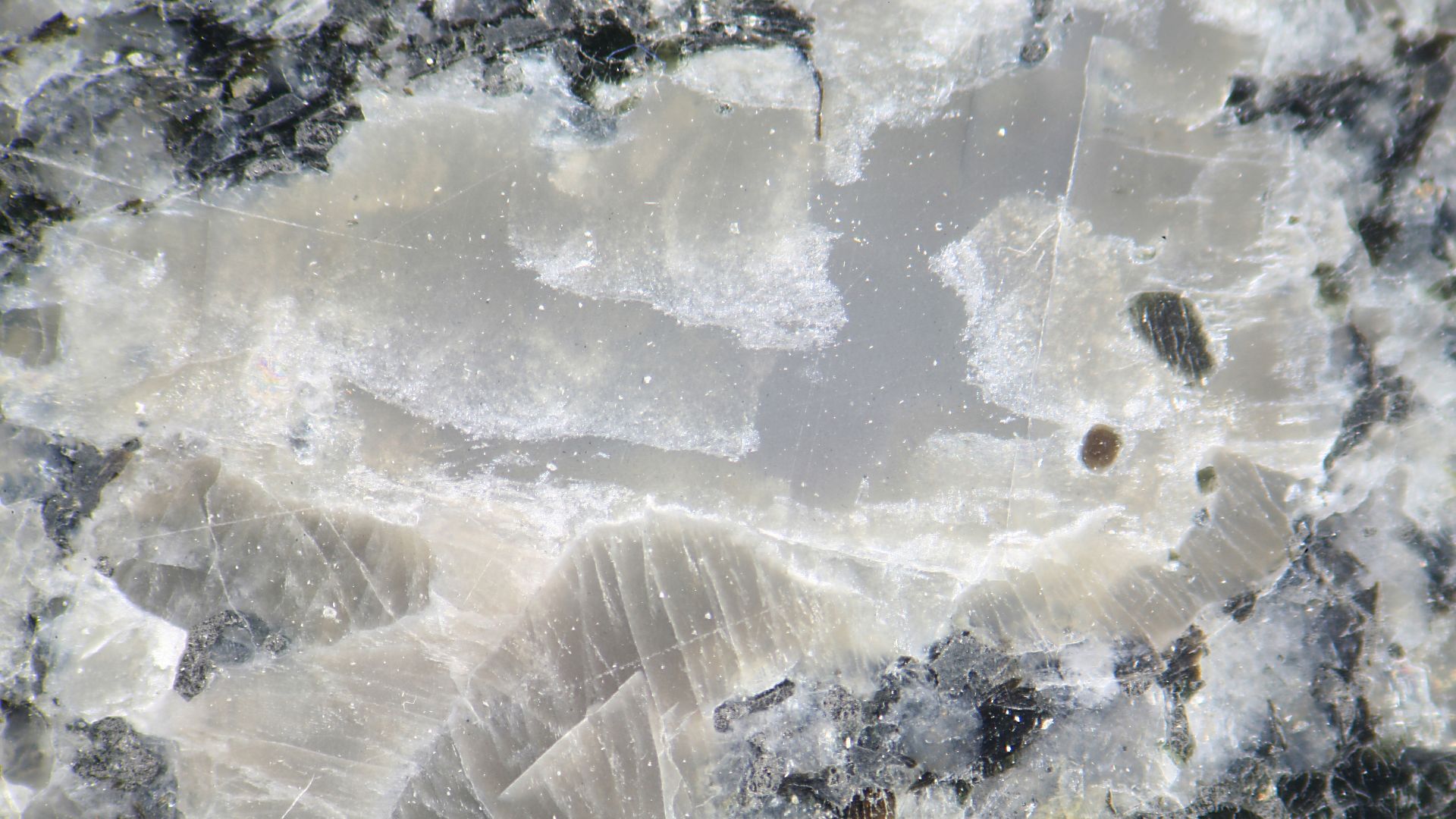

You'll notice that these scores run from 200 to 800, which may be confusing since the current GRE sections are scored from 130 to 170. That's because we used the first practice test from an old ETS PowerPrep program, which reports scores on the pre-2011 GRE scale of 200 to 800. Its questions are not widely published on the web (the software seems to be rarely used, even among tutors), so we don't have to worry about question leakage, i.e., the models having already seen these questions during training. Here's an example of what a question from the tool looks like:

Some important notes:

- To test the LLMs, we wrote a script that passed each question to the model as an image, had the model solve it, and then clicked the option the model chose.

- The script had some trouble with the longer reading comprehension (RC) passages and a couple of quant charts, and often returned wrong answers for them, but this didn't seem to affect the scores significantly.

- We ran each LLM only once, so the scores could change a bit across repeated runs.

- Like the Big Book, the old PowerPrep uses some question formats that are not on the current GRE, such as antonyms. It also doesn't allow calculators, a restriction we had no way to impose on the LLMs.
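Our test script isn't published, but the model-facing half of it can be sketched roughly. Below is a minimal Python sketch, assuming an OpenAI-style Chat Completions request with the question screenshot inlined as a base64 data URL. The prompt wording, the `ANSWER: X` reply format, and the helper names (`build_messages`, `parse_choice`, `option_index`) are hypothetical, and actually clicking the option in PowerPrep is not shown. Gemini models would need Google's own SDK instead.

```python
import base64
import re
import string
from typing import Dict, List, Optional

# Hypothetical prompt: the prompt used for the actual test was not published.
PROMPT = (
    "The image shows one multiple-choice GRE question. Solve it, then end "
    "your reply with 'ANSWER: X', where X is the letter of your choice."
)

def build_messages(png_bytes: bytes) -> List[Dict]:
    """Build a Chat Completions message list with the screenshot inlined
    as a base64 data URL next to the text prompt."""
    b64 = base64.b64encode(png_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": PROMPT},
            {"type": "image_url",
             "image_url": {"url": f"data:image/png;base64,{b64}"}},
        ],
    }]

# Matches "ANSWER: C" or "ANSWER: (C)", but not "ANSWER: EXPLANATION".
_ANSWER_RE = re.compile(r"ANSWER:\s*\(?([A-Z])\)?(?![A-Z])")

def parse_choice(reply: str, n_options: int = 5) -> Optional[str]:
    """Return the letter from the LAST 'ANSWER: X' in the reply, or None
    if there is none or it falls outside the first n_options letters."""
    matches = _ANSWER_RE.findall(reply.upper())
    if not matches:
        return None
    letter = matches[-1]
    return letter if letter in string.ascii_uppercase[:n_options] else None

def option_index(letter: str) -> int:
    """0-based index of the option to click: 'A' -> 0, 'B' -> 1, ..."""
    return ord(letter) - ord("A")

# Usage against the real API (not run here; needs `pip install openai`
# and an OPENAI_API_KEY):
#   from openai import OpenAI
#   client = OpenAI()
#   with open("question.png", "rb") as f:
#       messages = build_messages(f.read())
#   resp = client.chat.completions.create(model="gpt-5-mini", messages=messages)
#   letter = parse_choice(resp.choices[0].message.content)
#   # ...then click option number option_index(letter) in PowerPrep (not shown).
```

Taking the last `ANSWER:` in the reply is a deliberate choice: thinking models sometimes revise their answer mid-reply, and a reply with no parseable answer returns `None` rather than a guessed click.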

So what would these scores look like on the current GRE? Converting them with a concordance table (note that an old 800 in quant converts to only 166, so 166Q is the highest score that can be obtained on the old GRE), we get:

| Model | Verbal (130-170) | Quant (130-170) |
|---|---|---|
| o3 | 170 | 166 |
| gpt-5 | 170 | 166 |
| gpt-5-mini | 170 | 166 |
| gpt-4.1 | 169 | 149 |
| gpt-4.1-mini | 164 | 149 |
| gpt-4o | 169 | 138 |
| gpt-4o-mini | 143 | 138 |
| Gemini 2.5 Flash Lite (no thinking) | 159 | 130 |
| Gemini 2.5 Pro | 170 | 166 |
| Gemini 2.5 Flash | 170 | 166 |
| Gemini 2.0 Flash | 154 | 155 |
| Gemma 3 (27b) | 153 | 138 |
| Gemma 3 (12b) | 140 | 134 |
| Gemma 3 (4b) | 134 | 134 |
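The conversion from the first table to the second is just a per-section lookup. Here's a minimal Python sketch, assuming you only need the old-to-new pairs that actually appear in this post's two tables; it is not the full ETS concordance, and the names (`OBSERVED_VERBAL`, `OBSERVED_QUANT`, `to_new_scale`, `convert`) are hypothetical.

```python
from typing import Dict, Tuple

# Old-scale (200-800) -> current-scale (130-170) pairs, taken ONLY from the
# two tables in this post. This is NOT the full ETS concordance table.
OBSERVED_VERBAL: Dict[int, int] = {
    800: 170, 780: 170, 740: 169, 670: 164, 590: 159,
    510: 154, 500: 153, 350: 143, 320: 140, 270: 134,
}
OBSERVED_QUANT: Dict[int, int] = {
    800: 166, 710: 155, 620: 149, 360: 138, 350: 138,
    270: 134, 260: 134, 200: 130,
}

def to_new_scale(old_score: int, section: str) -> int:
    """Convert one old-scale score; section is "V" (verbal) or "Q" (quant)."""
    table = {"V": OBSERVED_VERBAL, "Q": OBSERVED_QUANT}[section]
    if old_score not in table:
        # Scores outside this post's data need the full ETS table.
        raise KeyError(f"{old_score}{section} is not in this post's data")
    return table[old_score]

def convert(results: Dict[str, Tuple[int, int]]) -> Dict[str, Tuple[int, int]]:
    """Map {model: (old V, old Q)} to {model: (new V, new Q)}."""
    return {
        model: (to_new_scale(v, "V"), to_new_scale(q, "Q"))
        for model, (v, q) in results.items()
    }

if __name__ == "__main__":
    old = {"gpt-4.1": (740, 620), "Gemini 2.0 Flash": (510, 710)}
    print(convert(old))
    # prints {'gpt-4.1': (169, 149), 'Gemini 2.0 Flash': (154, 155)}
```

Since an old 800 in quant maps to 166, no converted quant score can go above 166, which is why the top models cap out there rather than at 170.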

So what does this mean?

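- The top models from both OpenAI and Google are very good at the GRE: o3, gpt-5, gpt-5-mini, and Gemini 2.5 Pro all scored 800 in both sections, and Gemini 2.5 Flash fell short by only 20 points in verbal.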

- According to ChatGPT, if you're on the free plan and you hit the limits for the "main model" (GPT-5), you'll be redirected to the mini version (GPT-5 mini). This is big for GRE learners, as GPT-5 mini is much better at solving GRE problems than what free users had before, mainly because it's a hybrid thinking model. This is also why the Gemini 2.5 Flash model does pretty well. In comparison, gpt-4o-mini, which free users had to contend with just a month ago, is nearly useless on the test.

- Personally, I would use Copilot over ChatGPT Free, since I think it has more generous limits on OpenAI models, but GPT-5 mini should be good enough in most cases.

- Google's open-weight models (Gemma) flunked the GRE: even the largest, Gemma 3 (27b), managed only 153V/138Q. Note that OpenAI's open-weight model (gpt-oss) could not be tested because it does not support image input.

- Just because a model scored 800 in verbal or quant does not mean it made no errors. The quant curve is actually surprisingly generous: we saw an 800 (166Q) with four questions wrong.